
Newest Members

1. garfield2142 (joined Feb 02 2026 09:15)
2. Lerochem (joined Jan 28 2026 12:34)
3. Pickthor (joined Jan 25 2026 07:40)
4. Polytech Lille (joined Jan 22 2026 02:17)
5. Furyoisivie (joined Jan 13 2026 11:20)

Recently Added Posts

- Training: designing PCBs to withstand ESD discharges (Sandro, Feb 23 2026 06:26)

  Hello, does anyone know of a good "training course" on designing prote...

- Mini sumo tournament in Isère (pmdd, Feb 14 2026 09:46)

  ROBOT'TOUR! That sounds great! It's a superb tournament with international ambitions! Come in large num...

- Mini sumo tournament in Isère (Gédé, Feb 13 2026 10:28)

  The 2026 poster is out! :D One new thing: from now on the tournament will be called...

- Birth of my Sumo (pmdd, Feb 12 2026 10:35)

  Good evening, after this little AI-related diversion :Koshechka_08:, I re...

- Gears with no apparent purpose (Forthman, Feb 09 2026 10:53)

  Good evening, the YouTube channel "e-penser 2.0" has just released a video on the 3rd la...

Training: designing PCBs to withstand ESD discharge...

Hello,

Does anyone know of a good "training course" on designing ESD (electrostatic discharge) protection measures at the PCB level?

Any format works for me: in-person classes (in France), live video classes, online courses (free or paid), videos, a good book, ... In French or in English. Paid is no problem (something free suits me too, of course).

So far, my searches haven't been very successful:

- I find a lot of training on ESD protection measures for the workplace (wrist straps, anti-static mats, ...), whereas I'm looking for how to protect the PCBs I design (at several thousand euros per PCB, it's worth protecting them properly).

- I find a lot of introductions to the subject (manufacturers' application notes, introductory tutorials, videos under 2 hours, ...)

But so far I haven't managed to identify resources that would let me become competent on the subject (I think it would take at least a 2-day training course, or a book/course that takes 3-4 days or more to study).

Would anyone have a lead?

Thanks in advance

Mini sumo tournament in Isère

Hello,

In the welcome forum I mentioned the robotics workshop I run in Isère and the tournament that will take place in April 2023. Let me go into a bit more detail!

I'm part of an association called Tic et Sciences. We've been doing science outreach for more than 10 years: street workshops, talks, astronomy, ...

And for somewhat less time we've also had an Arduino and robotics activity.

My participation in the national sumo robot tournament prompted my friends at the association to propose a tournament in the same spirit next spring. So our members are currently each building a mini sumo. And participation has exceeded our expectations: about fifteen robots under construction!

The builders range in age from roughly 10/12 to over 70.

The mechanical part is what causes the most trouble, so with the local fablab (LUZ'in) we put together a small kit in 5 mm plywood. Laser-cut from an A4-sized sheet, the parts offer many assembly possibilities, so the robots can all be different.

For the electronics we offer a board with connectors that holds an Arduino Nano. It also includes a 6-volt power supply that powers 2 continuous-rotation servos.

All of this keeps the programming fairly simple, and the robots are starting to drive forwards and backwards!
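
To give an idea of why two continuous-rotation servos keep the code short, here is a minimal sketch in Python (the workshop boards run Arduino code; Python is used here purely for illustration). It assumes the common hobby-servo convention that a pulse of about 1500 µs means "stop" and about 1000 µs or 2000 µs means full speed in either direction. Real servos differ and usually need calibrating. The names `speed_to_pulse_us` and `drive_straight` are hypothetical, and so is the assumption that the right servo is mounted mirrored.

```python
from typing import Tuple

NEUTRAL_US = 1500  # assumed pulse width at which the servo stops
SPAN_US = 500      # assumed offset from neutral for full speed


def speed_to_pulse_us(speed: float) -> int:
    """Convert a speed in [-1.0, 1.0] to a pulse width in microseconds."""
    speed = max(-1.0, min(1.0, speed))  # clamp out-of-range requests
    return round(NEUTRAL_US + speed * SPAN_US)


def drive_straight(speed: float) -> Tuple[int, int]:
    """Return (left, right) pulse widths for straight-line motion.

    The two servos are assumed to face opposite ways on the chassis,
    so the right one receives the opposite command.
    """
    return speed_to_pulse_us(speed), speed_to_pulse_us(-speed)
```

With these assumptions, driving forwards and backwards is just a matter of calling `drive_straight` with a positive or a negative speed and sending the two pulse widths to the servos.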

Next step: detecting the white line that marks the edge of the dohyo. Some will fit a single reflective sensor, others 2. Everyone does as they wish and according to their abilities. The same goes for the rangefinders that will detect the opponent.
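
One possible way to combine these sensors, sketched in Python for illustration only: edge detection takes priority over chasing the opponent. The function name `choose_action`, the action labels, and the 40 cm detection range are all hypothetical; each builder's actual strategy may differ. A robot with a single line sensor could simply pass the same reading for both sides.

```python
from typing import Optional


def choose_action(
    left_on_line: bool,
    right_on_line: bool,
    opponent_distance_cm: Optional[float],
    detect_range_cm: float = 40.0,  # hypothetical detection range
) -> str:
    """Pick the next move from the line sensors and the rangefinder."""
    # Staying inside the ring comes first: back away from the white line.
    if left_on_line and right_on_line:
        return "reverse"
    if left_on_line:
        return "turn_right"
    if right_on_line:
        return "turn_left"
    # No edge in sight: push the opponent if the rangefinder sees it.
    if opponent_distance_cm is not None and opponent_distance_cm <= detect_range_cm:
        return "charge"
    # Otherwise keep rotating to look for the opponent.
    return "search"
```

For example, `choose_action(False, False, 25.0)` returns `"charge"`, while any line detection overrides the rangefinder reading.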

And then there will be the tournament, on Saturday, April 29 in La Tour du Pin. Our robots probably won't all be 100% operational, but that doesn't matter; everyone will have learned a lot.

The tournament is open to everyone. We should have a few robots built by IUT students, and individuals from other regions are interested. We hope for enough participants to make this competition a regular event and perhaps expand it later. If you can help us spread the word, thanks in advance! :-))

Our association's page, where you can download the rules and some programming info:

http://tic-et-sciences.org/?tournoi-de-robotique---robot-sumo

Right, I'll stop here, the post is already long!

I'm happy to give more details on any of these points!

31,167 Views · 90 Replies (Last reply by pmdd)

Naissance de mon Sumo

Bonjour

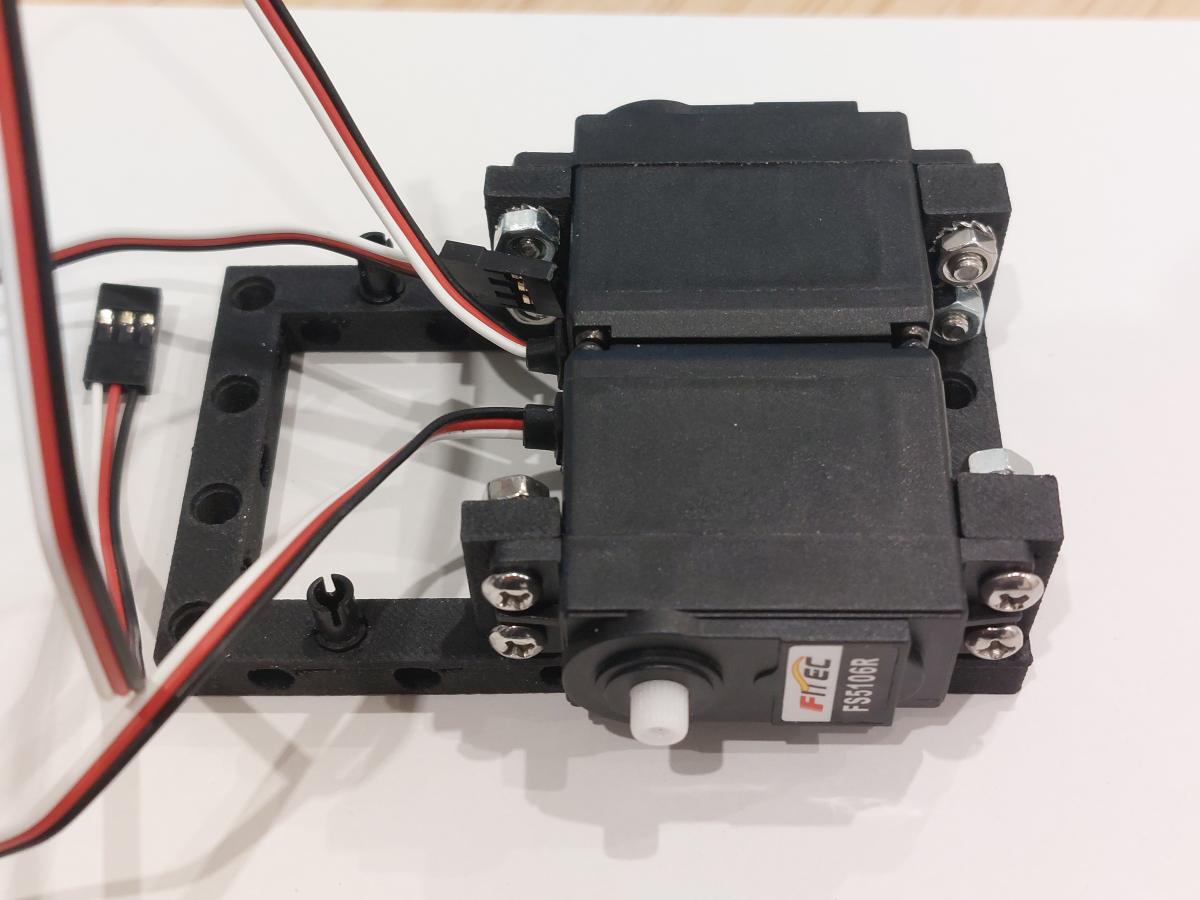

Dans la rubrique "Naissance de...", après mon quadrupède, je vous présente mon nouveau projet, toujours avec un concours en vue : la construction d'un mini sumo.

Ce n'est pas un projet très original mais il me convient dans la progression de ma formation pico/python. Par rapport au quadrupède il y aura plus de code et plus de capteurs.

Le mini sumo est très compact (10x10) et le terrain est très petit (diamètre 77 cm) . Un des avantages c'est que j'arrêterai de courir après mon robot, comme je fais depuis 6 mois avec Jag'Bot I

Je pense faire trois sumos:

* Un "basique", le premier pour me faire la main

* Un "costaud", solide sur ses roues

* Un "agile" que j'imagine avec des roues mécanum

Je pense aussi concevoir un système de déploiement, à voir.

Je retiens toujours le même principe, la fixation des moteurs/servos et de la batterie est un support imprimé 3D mais avec des trous compatibles Lego (diamètres et espacements) , ce qui me permet de construire par modules et de progresser petit à petit, quitte à coller la version finale.

Voici le point de départ :

33,188 Views · 244 Replies (Last reply by pmdd)


Latest Discussions

- Training: designing PCBs to withstand ESD discharges (Sandro, Feb 23 2026 06:26)
- 2nd place at the 2025 La Tour du Pin Robot Sumo competition (38) (Justin, Feb 01 2026 02:25)
- [Schematic review] 4WD robot running Linorobot2 (Pico + RPi 4 + TB6612FNG) (Pickthor, Jan 26 2026 10:47)
- Braiding with 2 stepper motors (cook, Jan 13 2026 06:16)
- [Mecapi] Science projects and communication plans (Geoffroy, Jan 02 2026 09:47)

Community statistics

- Total posts: 114,504
- Total members: 5,017
- Newest member: garfield2142
- Most users online at once: 15,890 (Nov 10 2025)